Did you know 1 in 5 people have a secret interest in music technology? Of course you didn’t, because it’s not true. However, when you find a group of passionate people in your workplace who are into music technology, you have to do something about it.

The root of the problem

Everyone at Potato receives a monthly allocation of learning and development time, which they can use to grow their skillset. This can include experimenting with new tools, processes and methods, taking courses, or attending events and lectures. From time to time, small groups of people come together to collaboratively learn and explore shared areas of interest.

Since a few of us are interested in music tech and the Web Audio API, we decided to spend our learning time building a project together. After a few workshopping sessions, we settled on an MVP: a simple software synth built with the API. This helped us deepen our understanding of generating and shaping audio in the browser, and also gave us a fun, hands-on demo for sharing what we learned with the wider team.

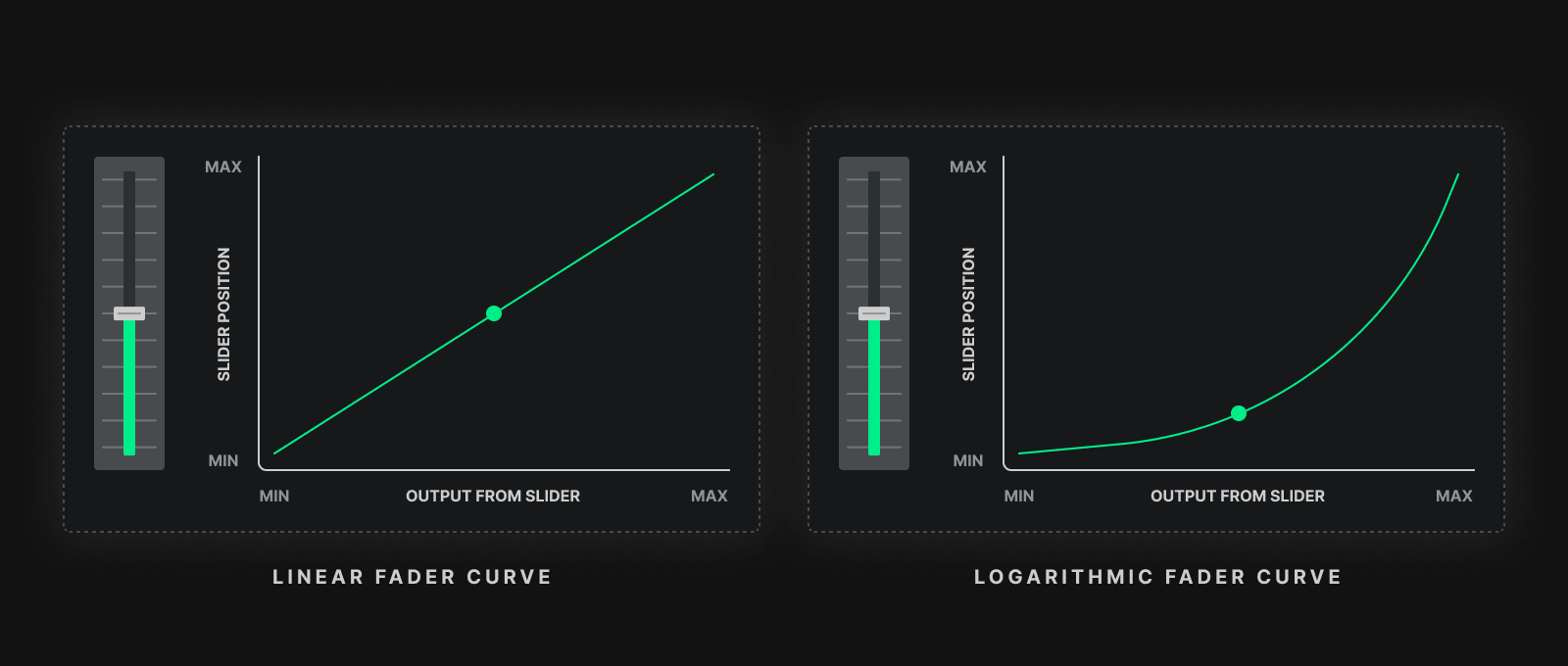

As we started to work on our project, we began to notice things we hadn’t considered before. We soon realised there was a significant lack of resources for building UI for audio components. This was most apparent in the controls themselves: most audio components on the web use knobs and range inputs with the default linear response curve. Linear controls are poorly suited to generating and shaping sound, because our ears perceive loudness and pitch roughly logarithmically rather than linearly.

Synthesiser knobs, mixer faders and even volume dials on radios use a logarithmic response curve to better match human hearing. How do we make an `<input type="range">` that follows this approach? And surely we weren’t the only ones who wanted to solve this challenge?
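
One way to approach this, sketched here under our own assumptions rather than as Root’s implementation, is to keep the range input linear from 0 to 1 and apply the curve in script: interpolate the slider position in decibels, then convert decibels to an amplitude multiplier. The function names and the -60 dB floor are hypothetical.

```typescript
// Hypothetical fader mapping: the range input stays linear (0 to 1) and the
// curve is applied in script. The -60 dB floor is an assumed default.
function positionToGain(position: number, minDb = -60, maxDb = 0): number {
  const p = Math.min(1, Math.max(0, position)); // clamp to the slider's range
  if (p === 0) return 0; // treat the very bottom of the travel as silence
  const db = minDb + p * (maxDb - minDb); // equal travel = equal steps in dB
  return Math.pow(10, db / 20); // dB to amplitude multiplier
}

// Inverse mapping, e.g. for placing the thumb to match an existing gain value.
function gainToPosition(gain: number, minDb = -60, maxDb = 0): number {
  if (gain <= 0) return 0;
  const db = 20 * Math.log10(gain);
  return Math.min(1, Math.max(0, (db - minDb) / (maxDb - minDb)));
}
```

The result could then be written to a `GainNode`, e.g. `gainNode.gain.value = positionToGain(Number(input.value))`, with the input’s `min`, `max` and `step` set to `0`, `1` and `0.01`.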

Around this time, Potato Labs, a company-wide initiative that provides support and resources for fostering and growing ideas, opened for proposals. Armed with insights from our early experiments, we submitted our idea for sponsorship: create a library of Web Components that solves this challenge, and get the audio community involved.

What we’ve created

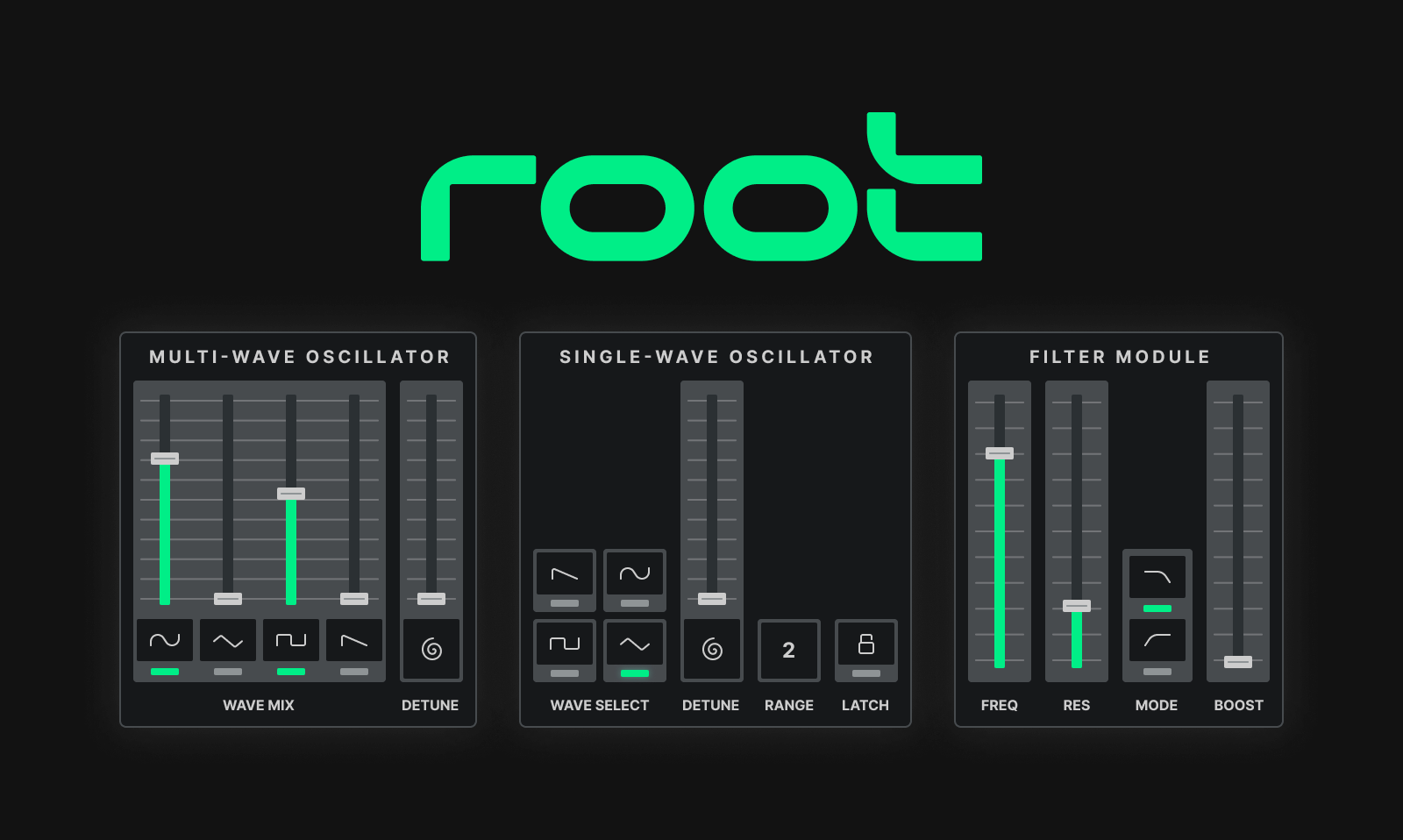

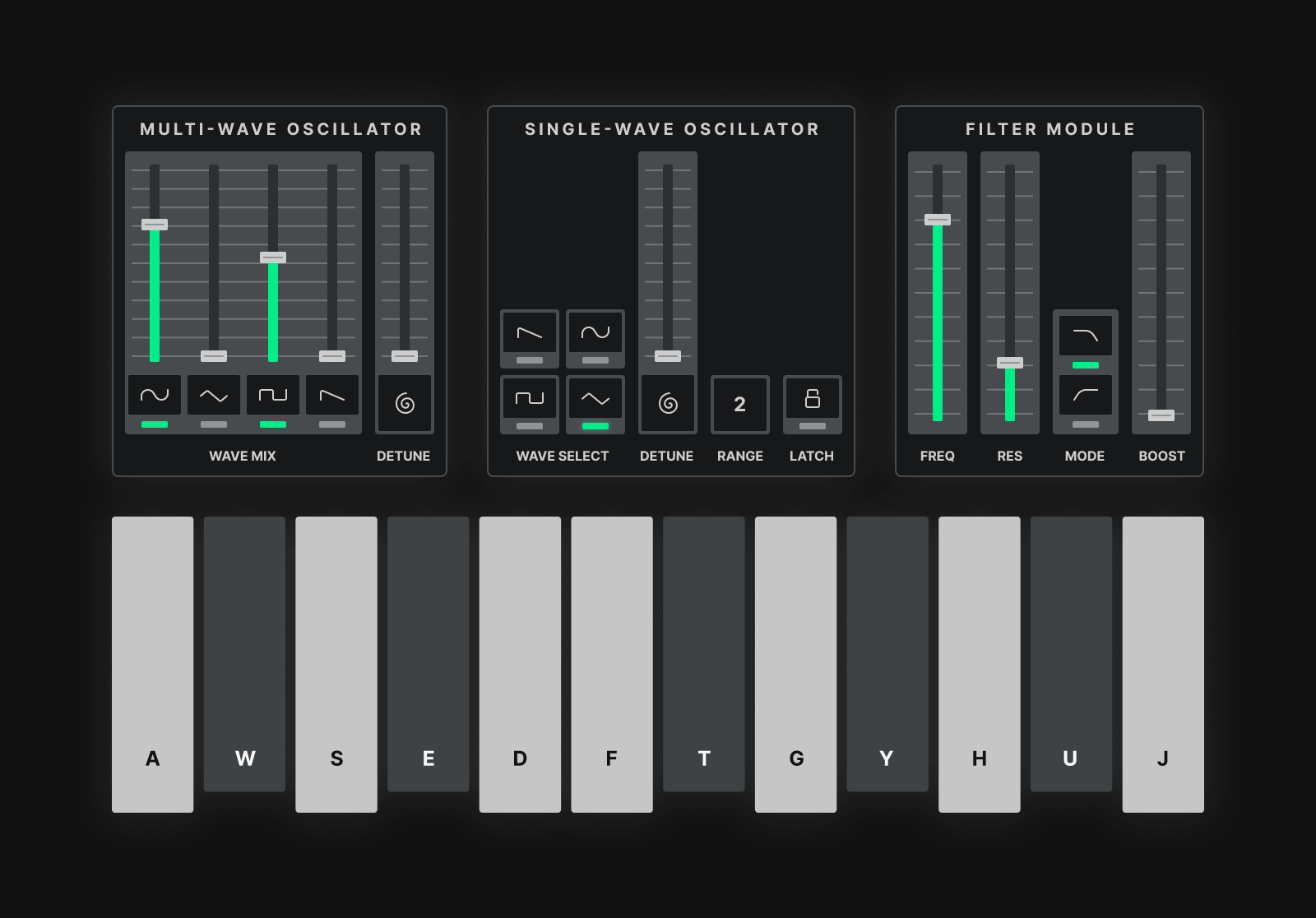

For our first release, we have created eight bespoke audio components. They provide self-contained logic to generate and process audio via the Web Audio API, with an accessible UI, and demonstrate the core aspects of synthesis: input interface, tone generation and tone shaping.

Keyboard

A simple input device that accepts mouse and keyboard input, converting it to pitch information that can be fed into tone-generating modules.
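
As an illustration of the keyboard’s job, the sketch below maps key presses to MIDI note numbers and then to equal-tempered frequencies (A4 = 440 Hz). The key layout, which follows a common “computer piano” convention starting at middle C, and the helper names are assumptions, not Root’s actual mapping.

```typescript
// Hypothetical layout: the home row plays white notes and the row above plays
// black notes, starting at middle C (MIDI note 60). Root's own mapping may differ.
const KEY_TO_MIDI_NOTE = new Map<string, number>([
  ["a", 60], ["w", 61], ["s", 62], ["e", 63], ["d", 64], ["f", 65], ["t", 66],
  ["g", 67], ["y", 68], ["h", 69], ["u", 70], ["j", 71], ["k", 72],
]);

// Twelve-tone equal temperament, with A4 (MIDI note 69) tuned to 440 Hz by default.
function midiNoteToFrequency(note: number, a4Hz = 440): number {
  return a4Hz * Math.pow(2, (note - 69) / 12);
}

// Converts a KeyboardEvent.key value to a frequency, or null if the key is not mapped.
function keyToFrequency(key: string): number | null {
  const note = KEY_TO_MIDI_NOTE.get(key.toLowerCase());
  return note === undefined ? null : midiNoteToFrequency(note);
}
```

In a `keydown` listener you could skip auto-repeated events via `event.repeat` and pass the result to `oscillator.frequency.setValueAtTime(freq, audioContext.currentTime)`.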

MIDI

This component enables you to plug a class-compliant MIDI keyboard (or any other MIDI device that sends pitch information) into your computer and use it to control the keyboard module.
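
Under the hood, a MIDI keyboard sends short byte messages. The hedged sketch below decodes the two that carry pitch information, note-on and note-off, from the `Uint8Array` a browser delivers in a Web MIDI message event; the `NoteEvent` type and `parseMidiMessage` name are hypothetical.

```typescript
// Hypothetical decoded form of the two MIDI messages that carry pitch information.
type NoteEvent =
  | { type: "noteon"; channel: number; note: number; velocity: number }
  | { type: "noteoff"; channel: number; note: number };

// Decodes a raw MIDI message (status byte, then two data bytes).
// Returns null for anything that is not a note-on or note-off.
function parseMidiMessage(data: Uint8Array): NoteEvent | null {
  if (data.length < 3) return null;
  const command = data[0] & 0xf0; // upper nibble: message type
  const channel = data[0] & 0x0f; // lower nibble: channel 0-15
  const note = data[1]; // 0-127, where 60 is middle C
  const velocity = data[2]; // 0-127
  if (command === 0x90 && velocity > 0) {
    return { type: "noteon", channel, note, velocity };
  }
  // Many devices send note-on with velocity 0 instead of a dedicated note-off,
  // so a 0x90 message that reaches this point is treated as a release.
  if (command === 0x80 || command === 0x90) {
    return { type: "noteoff", channel, note };
  }
  return null;
}
```

In a browser, the bytes would come from `navigator.requestMIDIAccess()`: iterate `access.inputs.values()` and set each input’s `onmidimessage` handler to pass `event.data` to the parser.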

Single-wave oscillator

A basic oscillator built on the Web Audio API’s OscillatorNode. It generates a single waveform and includes a detune control with a user-definable range and a latch mechanism.
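
OscillatorNode’s `detune` parameter is measured in cents, and the Web Audio specification computes the oscillator’s effective frequency as `frequency * 2^(detune / 1200)`. A minimal sketch of a detune control with a user-definable range might clamp the value and apply that formula; the helper names and the ±100 cent default are assumptions, and the latch mechanism is not modelled here.

```typescript
// Hypothetical helper: keep a detune amount (in cents) inside a user-defined ±range.
function clampDetune(cents: number, rangeCents = 100): number {
  return Math.min(rangeCents, Math.max(-rangeCents, cents));
}

// The frequency an OscillatorNode produces for a given base frequency and detune,
// following the Web Audio spec formula: frequency * 2^(detune / 1200).
function effectiveFrequency(baseHz: number, detuneCents: number, rangeCents = 100): number {
  return baseHz * Math.pow(2, clampDetune(detuneCents, rangeCents) / 1200);
}
```

In the browser, only `oscillator.detune.value = clampDetune(value, range)` is needed; `effectiveFrequency` just makes the arithmetic visible: +100 cents is one equal-tempered semitone and +1200 cents is an octave.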

Multi-wave oscillator

This module generates and mixes four classic waveshapes (sine, saw, square and triangle), with a global detune control.
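
To show what mixing four waveshapes means at the sample level, here is a naive sketch that evaluates each shape at a given phase and takes a weighted average. It is an illustration only: the level values are hypothetical mixer settings, and these non-band-limited shapes would alias if used to generate audio directly.

```typescript
type WaveShape = "sine" | "sawtooth" | "square" | "triangle";

// Naive (non-band-limited) shapes: phase p in [0, 1) to an amplitude in [-1, 1].
const WAVES: Record<WaveShape, (p: number) => number> = {
  sine: (p) => Math.sin(2 * Math.PI * p),
  sawtooth: (p) => 2 * p - 1,
  square: (p) => (p < 0.5 ? 1 : -1),
  triangle: (p) => 1 - 4 * Math.abs(p - 0.5),
};

// Weighted average of the four shapes at one phase. Assuming non-negative levels,
// dividing by the total level keeps the mix within [-1, 1].
function mixSample(levels: Record<WaveShape, number>, phase: number): number {
  const p = phase - Math.floor(phase); // wrap any phase into [0, 1)
  let sum = 0;
  let total = 0;
  for (const shape of Object.keys(WAVES) as WaveShape[]) {
    sum += levels[shape] * WAVES[shape](p);
    total += levels[shape];
  }
  return total > 0 ? sum / total : 0;
}
```

In a browser, a more likely arrangement is four `OscillatorNode`s (types `"sine"`, `"sawtooth"`, `"square"` and `"triangle"`), each feeding its own `GainNode` into a shared output, with the global detune applied by setting the same `detune` value on all four. The browser then generates band-limited waveforms for you.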

Dual filter

The filter module is based on the Web Audio API’s BiquadFilterNode. It can be switched between lowpass and highpass modes, with controls for cutoff frequency and resonance, and uses a 12 dB/octave rolloff by default.
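
For readers curious about what BiquadFilterNode computes, the sketch below derives second-order lowpass and highpass coefficients using the formulas in the Web Audio specification (which are based on the RBJ Audio EQ Cookbook) and evaluates the resulting magnitude response. For these two filter types the specification interprets `Q` as a resonance in decibels. The function names are hypothetical; in practice you would set `type`, `frequency` and `Q` on the node and let the browser do this work.

```typescript
type FilterMode = "lowpass" | "highpass";

// Second-order coefficients normalised so that a0 = 1.
interface Biquad { b0: number; b1: number; b2: number; a1: number; a2: number }

function biquadCoefficients(mode: FilterMode, cutoffHz: number, resonanceDb: number, sampleRate: number): Biquad {
  const w0 = (2 * Math.PI * cutoffHz) / sampleRate;
  const cos = Math.cos(w0);
  // Resonance in dB is converted to a linear Q, as the Web Audio spec does for these types.
  const alpha = Math.sin(w0) / (2 * Math.pow(10, resonanceDb / 20));
  const a0 = 1 + alpha;
  const b0 = mode === "lowpass" ? (1 - cos) / 2 : (1 + cos) / 2;
  const b1 = mode === "lowpass" ? 1 - cos : -(1 + cos);
  return { b0: b0 / a0, b1: b1 / a0, b2: b0 / a0, a1: (-2 * cos) / a0, a2: (1 - alpha) / a0 };
}

// Magnitude of H(z) at z = e^{jw} for a frequency in Hz, returned in dB.
function magnitudeDb(c: Biquad, freqHz: number, sampleRate: number): number {
  const w = (2 * Math.PI * freqHz) / sampleRate;
  // Real and imaginary parts of numerator and denominator, using e^{-jw} = cos w - j sin w.
  const numRe = c.b0 + c.b1 * Math.cos(w) + c.b2 * Math.cos(2 * w);
  const numIm = -(c.b1 * Math.sin(w) + c.b2 * Math.sin(2 * w));
  const denRe = 1 + c.a1 * Math.cos(w) + c.a2 * Math.cos(2 * w);
  const denIm = -(c.a1 * Math.sin(w) + c.a2 * Math.sin(2 * w));
  return 20 * Math.log10(Math.hypot(numRe, numIm) / Math.hypot(denRe, denIm));
}
```

With these formulas the lowpass passes DC at unity gain, peaks at the cutoff by exactly the resonance value in dB, and falls away at 12 dB per octave above it. In the browser, `BiquadFilterNode.getFrequencyResponse()` returns comparable magnitude data, which is handy for drawing a filter curve in the UI.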

Connect

Connect is our version of a patch bay. Place as many components and modules inside it as you like, then use id, sendTo and recieveFrom to route audio signals between them.
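
A patch bay ultimately has to turn routing declarations into `AudioNode.connect()` calls. As a hedged sketch, assuming `sendTo` and `recieveFrom` each hold a list of module ids (Root’s actual attribute format may differ), routes declared from either end could be resolved into a de-duplicated edge list like this; the `RoutedModule` interface and `resolveRoutes` function are hypothetical.

```typescript
// Hypothetical routing declaration for one module inside the patch bay.
interface RoutedModule {
  id: string;
  sendTo?: string[];
  recieveFrom?: string[];
}

// Folds both kinds of declaration into one de-duplicated list of [source, destination] edges,
// so a route declared from both ends is only connected once.
function resolveRoutes(modules: RoutedModule[]): Array<[string, string]> {
  const ids = new Set(modules.map((m) => m.id));
  const seen = new Set<string>();
  const edges: Array<[string, string]> = [];
  const add = (from: string, to: string): void => {
    if (!ids.has(from) || !ids.has(to)) {
      throw new Error(`Unknown module in route ${from} -> ${to}`);
    }
    const key = JSON.stringify([from, to]); // unambiguous key even if ids contain symbols
    if (!seen.has(key)) {
      seen.add(key);
      edges.push([from, to]);
    }
  };
  for (const m of modules) {
    for (const to of m.sendTo ?? []) add(m.id, to);
    for (const from of m.recieveFrom ?? []) add(from, m.id);
  }
  return edges;
}
```

Each `[from, to]` pair would then map to a call like `source.connect(destination)` on the corresponding audio nodes.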

Fader

The UI component for a fader, supporting three response curves: linear, logarithmic and golden ratio. It is used to build the filter and the single-wave and multi-wave oscillator modules, and is a useful building block for future components.
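
To illustrate the difference between the first two curves, the sketch below maps a fader position (0 to 1) to a parameter value and back. The logarithmic version interpolates the exponent, so equal travel covers equal frequency ratios: over 20 Hz to 20 kHz, each tenth of the travel spans roughly one octave. The golden-ratio curve is left out because its exact definition in Root isn’t described here, and the names are hypothetical.

```typescript
// Hypothetical curve helpers for a fader whose thumb position runs from 0 to 1.
type Curve = "linear" | "logarithmic";

function positionToValue(curve: Curve, position: number, min: number, max: number): number {
  const p = Math.min(1, Math.max(0, position));
  if (curve === "linear") return min + p * (max - min);
  // Logarithmic curve requires min > 0: min * (max / min)^p.
  return min * Math.pow(max / min, p);
}

// Inverse mapping, used to place the thumb for an existing parameter value.
function valueToPosition(curve: Curve, value: number, min: number, max: number): number {
  const v = Math.min(max, Math.max(min, value));
  if (curve === "linear") return (v - min) / (max - min);
  return Math.log(v / min) / Math.log(max / min);
}
```

On a linear fader, the midpoint of a 20 Hz to 20 kHz cutoff control sits at about 10 kHz, leaving most audible detail squeezed into the bottom of the travel; the logarithmic midpoint sits at about 632 Hz.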

Display

A multi-functional UI component for displaying controls within modules. It can optionally toggle between states and can house an SVG icon or a text label. It is used widely across the filter and the single-wave and multi-wave oscillator modules to switch between waveforms and other user-controlled parameters.

The road ahead

Our goal with Root is to provide building blocks that enable people to create all kinds of web-based audio applications, such as synthesisers, tuners, sequencers, audio effects and visualisers. Although our first release lays the groundwork for the wider audio community to get involved, we still have a lot of work to do to achieve our ambitions. We have identified three core areas to focus on next.

Components

We have barely scratched the surface of what the Web Audio API has to offer, and we have plenty of ideas to explore further, such as a graphic EQ, an envelope generator and effects like a compressor.

Framework compatibility

At the moment, Root is built on Lit. In the future, we want Root users to be able to use the library in projects built with other frameworks, such as Vue, React and Next.js.

Customisation & theming

Whilst the first release already includes the fader and display UI components as building blocks for further modules, we want to make it easier to choose which parts of a given component you use. We also want to offer different themes alongside the default one, and the ability to create your own.

See (and hear) Root in action

How you can contribute

If you share our passion for music technology and our excitement about the possibilities of the Web Audio API, we welcome you to get involved and contribute to Root’s ongoing development!

Visit our GitHub page for more information, and get in touch with us at opensource@potatolondon.com.

Special thanks

Potato Labs

Taavi Kelle, Bruno Belcastro & Paolo Chillari

For all things math

Adam Alton & Michael Strutt